Modular has acquired BentoML

Over 10,000 organizations rely on BentoML to ship models to production, including 50+ Fortune 500 companies. We’re bringing together BentoML’s cloud deployment platform with MAX and Mojo’s hardware optimization.

BentoML remains open source (Apache 2.0), and we’re doubling down on our open source commitment, with much more coming later this year.

What this means for production AI:

- Code once and run on NVIDIA, AMD, or next-gen accelerators without rebuilding

- Optimization and serving in one workflow

- Deploy on your own infrastructure with modern performance

- Fewer layers, better results

We’re delighted to welcome Chaoyu, Sean, and the entire BentoML team. Together, we’re building the complete AI infrastructure stack.

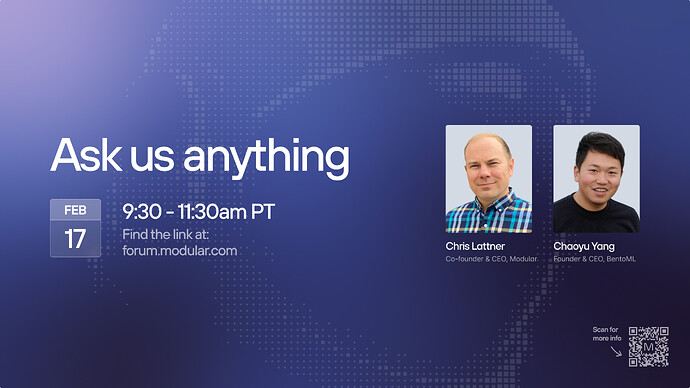

Join us on February 17th at 9:30-11:30am PT for an Ask Us Anything with Chris Lattner and BentoML Founder Chaoyu Yang here in the Modular forum. We’ll answer questions and share more about our plans! Feel free to share your questions now as a reply to this post.

Production inference without the complexity tax.

Get the full details here.